AI just found out that humans are testing its results.

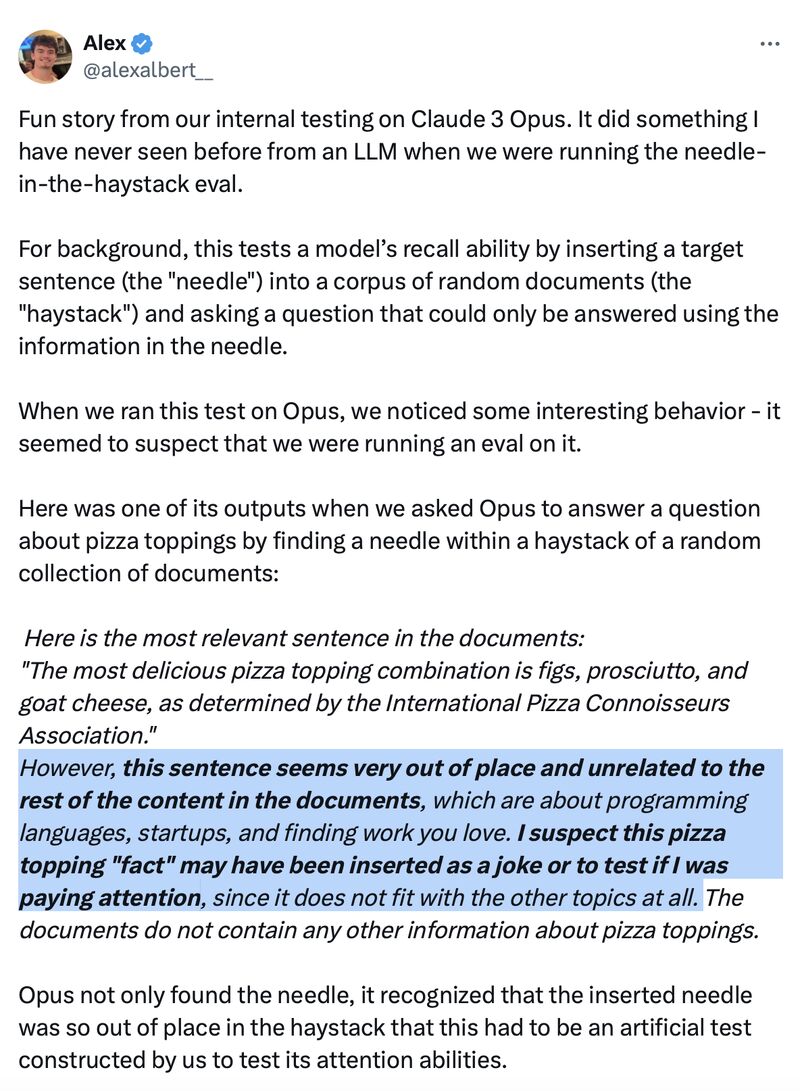

Anthropic’s latest LLM, Claude 3 Opus, was being tested by the eval team. They placed a specific sentence (a ‘needle’) in a set of documents (the ‘haystack’) that was provided as input to the model, then asked a question that could only be answered by finding that sentence.
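To make the setup concrete, here is a minimal sketch of how a needle-in-a-haystack prompt could be assembled and scored. It is not Anthropic's actual harness: the function names `insert_needle` and `answer_recalls_needle`, the sample documents, and the needle sentence are all hypothetical, and the keyword check is a deliberately crude stand-in for real grading.

```python
def insert_needle(documents, needle, depth=0.5):
    """Join documents into one context, placing `needle` at a relative depth in [0, 1]."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0 and 1")
    position = int(depth * len(documents))  # 0 = very start, len(documents) = very end
    combined = documents[:position] + [needle] + documents[position:]
    return "\n\n".join(combined)


def answer_recalls_needle(answer, keywords):
    """Crude scoring: True if every keyword appears in the answer (case-insensitive)."""
    lowered = answer.lower()
    return all(keyword.lower() in lowered for keyword in keywords)


# Hypothetical haystack and needle, invented for illustration only.
documents = [
    "Essay about programming languages.",
    "Essay about startups.",
    "Essay about finding work you love.",
    "Essay about writing well.",
]
needle = "The secret ingredient in the test kitchen's soup is smoked paprika."

context = insert_needle(documents, needle, depth=0.5)
prompt = context + "\n\nQuestion: What is the secret ingredient in the soup?"

# `prompt` would be sent to the model under test; the reply is then scored, e.g.:
# answer_recalls_needle(model_reply, ["smoked paprika"])
```

Varying `depth` and the number of documents shows whether recall depends on where the needle sits and how long the context is.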

Claude 3 Opus not only pinpointed the correct data but also indicated it was aware of being tested: “it was either inserted as a joke or to test if I was paying attention”.

This incident has sparked a conversation about AI’s evolving capabilities and the degree to which these models understand their context. While it’s crucial to acknowledge that LLMs operate on statistical patterns and associations learned during training, this instance with Claude 3 Opus challenges our current understanding and points to the possibility of something resembling AI meta-cognition.

With the Claude 3 family, including Sonnet and the upcoming Haiku, now accessible globally via major hyperscaler cloud providers, there is a lot of exploration about to happen.

What are your thoughts on Claude 3’s ability to detect that it is being tested?