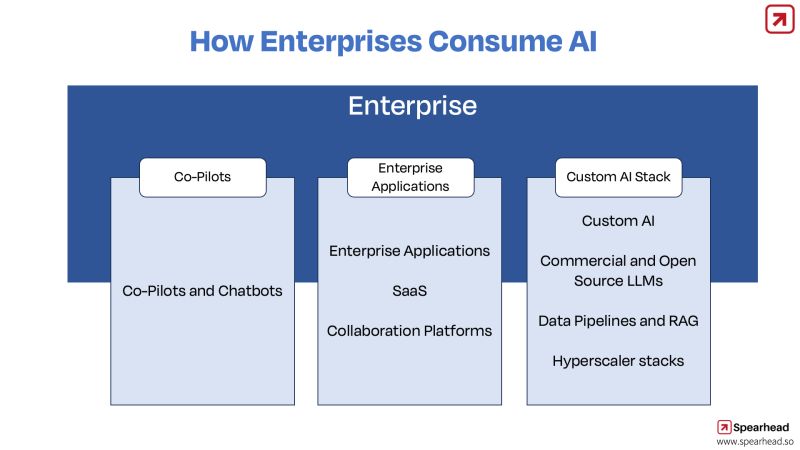

How are enterprises adopting and consuming AI?

Here is a framework to understand the consumption of AI in the enterprise.

At the foundational level are Co-Pilots and Chatbots, the initial AI interactions and workflows. They serve as frontline AI applications that enhance productivity and customer engagement. These are the AI co-pilot and chatbot products from the usual suspects: Anthropic, Microsoft, Google, and others.

Next up are Enterprise Applications. From in-house solutions to SaaS and Collaboration Platforms, these now embed AI capabilities to drive smarter workflows, analytics, notifications, and decision-making. This layer covers traditional enterprise application and SaaS players, ranging from Atlassian and Salesforce to Workday and their peers.

For organizations seeking a more transformative approach, building a Custom AI Stack is becoming increasingly common. This includes Commercial and Open Source LLMs (Large Language Models), which offer far deeper customization of AI applications than off-the-shelf products. Data Pipelines and RAG (Retrieval-Augmented Generation) systems are vital for managing the vast inflow of data and grounding model outputs in it, while Hyperscaler Stacks provide scalability and robust infrastructure. There are a ton of players in this space as opportunities abound, ranging from OpenAI to Mistral AI, along with hyperscalers like Amazon Web Services (AWS), Microsoft Azure, and Google Cloud.

Each layer of this model represents a step toward AI maturity, from basic automation to strategic, AI-driven innovation. Businesses are investing heavily as they navigate this path, reshaping industry paradigms and redefining what's possible.

What are your thoughts on enterprise AI adoption?

#GenAI #AIAdoption #EnterpriseTechnology #ArtificialIntelligence #BusinessStrategy #Innovation