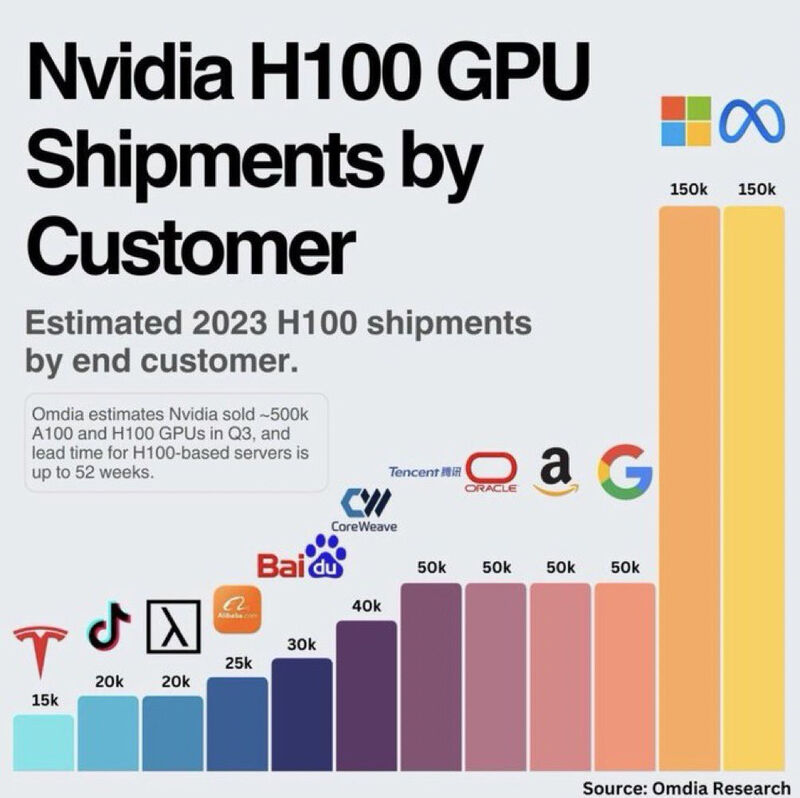

Only a handful of companies account for a large share of NVIDIA's H100 chip orders.

H100 is NVIDIA's ninth-generation data center GPU. It has more Tensor and CUDA cores than the A100, running at higher clock speeds. It also carries 50MB of Level 2 cache and 80GB of HBM3 memory with twice the bandwidth of its predecessor, reaching 3 TB/s.
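To make the 3 TB/s figure concrete, here is a rough back-of-envelope calculation. It assumes decimal units (1 GB = 10^9 bytes, 1 TB = 10^12 bytes) and the rounded 3 TB/s peak quoted above. Real sustained bandwidth is lower, so treat this as a lower bound on the time needed to read the entire 80GB of HBM3 once:

$$
t = \frac{80\ \text{GB}}{3\ \text{TB/s}} = \frac{80 \times 10^{9}\ \text{B}}{3 \times 10^{12}\ \text{B/s}} \approx 0.0267\ \text{s} \approx 26.7\ \text{ms}
$$

At half that bandwidth, which is the "twice the bandwidth" comparison above, the same full-memory pass would take roughly twice as long, about 53 ms.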

H100 is the premier AI chip on the market, and it is highly coveted for its performance. NVIDIA promises up to 9x faster AI training and up to 30x faster AI inference on popular machine learning models compared with the A100, its predecessor, which was released just two years earlier. Lead times for H100-based servers range from 36 to 52 weeks.

Market research firm Omdia estimates that Meta and Microsoft are the largest buyers of NVIDIA's H100 GPUs, having procured as many as 150,000 units each. That is considerably more than Google, Amazon, Oracle, and Tencent, which bought 50,000 each. Notably, the majority of server GPUs go to hyperscale cloud service providers, while server OEMs (Dell Technologies, Lenovo, Hewlett Packard Enterprise) still cannot get enough AI and HPC GPUs to fulfill their server orders.
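To see how concentrated these orders are, here is a small Python sketch that tallies the Omdia figures quoted above. It takes the "as many as" numbers at face value and only covers the six companies named in the post, not the whole H100 market. The resulting percentages are shares of this subset only. The dictionary and variable names are illustrative.

```python
# Omdia's estimated H100 purchases for the six buyers named above.
# Figures are upper-bound estimates ("as many as"), so results are rough.
h100_units = {
    "Meta": 150_000,
    "Microsoft": 150_000,
    "Google": 50_000,
    "Amazon": 50_000,
    "Oracle": 50_000,
    "Tencent": 50_000,
}

# Total units attributed to these six buyers (not the whole market).
total = sum(h100_units.values())

# Each buyer's share of that six-company total.
shares = {name: units / total for name, units in h100_units.items()}

# Combined share of the two largest buyers.
top_two = shares["Meta"] + shares["Microsoft"]

print(f"Total across named buyers: {total:,}")
for name, share in shares.items():
    print(f"{name:<10} {share:6.1%}")
print(f"Meta + Microsoft: {top_two:.0%}")
```

Under these assumptions, the six buyers account for 500,000 GPUs. Meta and Microsoft together hold 60% of that total, and each of the other four holds 10%.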

That said, most hyperscalers are now designing their own chips: custom silicon for AI, high-performance computing, and video workloads. The customized server market is projected to reach $196B by 2027.

This shift toward custom, application-optimized server configurations is set to become the norm as companies realize the cost-efficiency of building specialized processors. Media and AI are the current front runners, and other sectors such as database management and web services are expected to follow.

What are your thoughts on companies’ appetite for AI chips like H100?

#Nvidia #AIInfrastructure #ServerInnovation #ProprietaryTechnology #FutureOfComputing #h100 #generativeai

Data: Omdia, Data Center Knowledge, Yahoo Finance.