GPUs are better than CPUs for AI.

Here is why.

In the realm of AI, computing power matters.

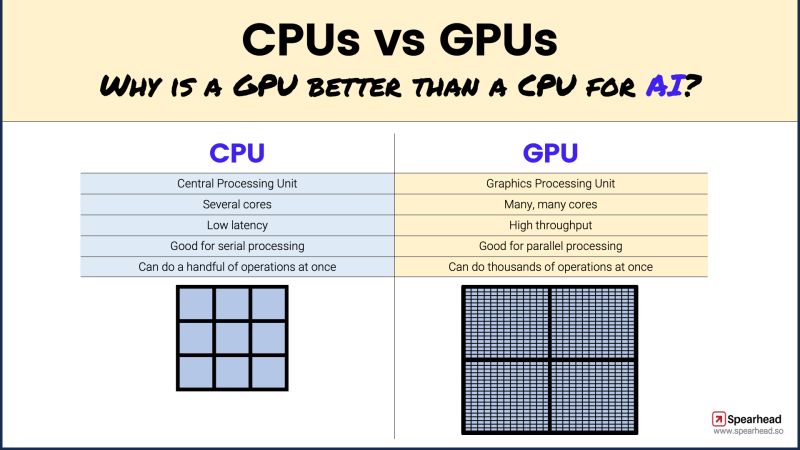

CPUs have relatively few cores, each designed for fast sequential processing, which makes them great for tasks that depend on high single-threaded performance.

However, AI is a different ballgame.

AI demands high parallelism, and this is where GPUs shine.

They have hundreds, even thousands, of cores that are optimized for parallel processing.

This means that while a CPU might be executing a few instructions or software threads at a time, a GPU could be performing hundreds or even thousands of operations simultaneously.
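To make the contrast concrete, here is a deliberately idealized sketch in Python. It assumes every operation is independent and that each core or thread finishes exactly one operation per step. The core counts are hypothetical, and real performance also depends on clock speed, SIMD width and memory bandwidth, none of which are modeled here.

```python
import math

def rounds_needed(num_ops: int, parallel_lanes: int) -> int:
    """Idealized number of steps if each lane completes one op per step.

    Ignores clock speed, per-core throughput, SIMD width and memory
    bandwidth, so it illustrates the parallelism argument only.
    """
    return math.ceil(num_ops / parallel_lanes)

# Hypothetical numbers, chosen for illustration only.
NUM_OPS = 1_000_000   # independent multiply-adds to perform
CPU_LANES = 16        # assumed: a CPU running 16 threads at once
GPU_LANES = 10_000    # assumed: a GPU running 10,000 threads at once

cpu_rounds = rounds_needed(NUM_OPS, CPU_LANES)
gpu_rounds = rounds_needed(NUM_OPS, GPU_LANES)
print(cpu_rounds, gpu_rounds)  # 62500 100
```

Under these assumptions the GPU needs 100 steps where the CPU needs 62,500. Each CPU core is individually faster, so the real-world gap is usually narrower than this ratio suggests.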

This ability to run many small, independent calculations at once gives GPUs a significant edge in AI workloads, which often involve large amounts of data that can be processed in parallel. That said, there are specific use cases where CPUs handle computations more efficiently.
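Matrix multiplication, a core operation in neural networks, shows why this parallelism pays off. The minimal Python sketch below uses plain lists and no GPU library; `matmul_element` and `matmul` are illustrative names, not any framework's API. Each output element depends only on one row of `A` and one column of `B`, so the elements can be computed in any order, or all at the same time.

```python
from itertools import product

def matmul_element(a, b, i, j):
    """Compute one output element: C[i][j] = sum over k of A[i][k] * B[k][j].

    It reads only row i of A and column j of B and writes nothing shared,
    so every (i, j) element can be computed independently of the others.
    """
    return sum(a[i][k] * b[k][j] for k in range(len(b)))

def matmul(a, b):
    rows, cols = len(a), len(b[0])
    c = [[0] * cols for _ in range(rows)]
    # Here the (i, j) pairs are visited one after another, as a single
    # CPU thread would. GPU libraries typically spread these independent
    # elements (or tiles of them) across many threads instead.
    for i, j in product(range(rows), range(cols)):
        c[i][j] = matmul_element(a, b, i, j)
    return c

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(matmul(A, B))  # [[19, 22], [43, 50]]
```

Because no element depends on another, computing them in reverse order gives the same matrix. That independence is exactly what lets a GPU work on thousands of them simultaneously.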

As we continue to push the adoption of AI and machine learning, the need for more GPUs will keep growing, at least until a better or different AI architecture comes along.

What are your thoughts on GPUs vs CPUs?

#generativeai #AI #GPUs